Are you also keen to run inference with a face recognition model on Jetson Nano?

I failed to run TensorRT inference on Jetson Nano because the PReLU activation function is not supported in TensorRT 5.1; the channel-wise PReLU operator is only available from TensorRT 6. In this blog post, I explain the steps required to convert an ONNX model to TensorRT and why those steps failed to produce a TensorRT engine I could run on Jetson Nano.

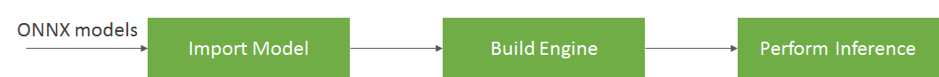

Our example loads ArcFace, a face recognition model, in ONNX format.

Now let's convert the downloaded ONNX model into a TensorRT engine saved as arcface_trt.engine.

TensorRT comes pre-installed on Jetson Nano; the version shipped with the current NVIDIA JetPack SDK release is TensorRT 5.1. To check whether ONNX is installed as well, start Python and run:

```python
import onnx
```

If this raises an `ImportError`, ONNX is not installed on Jetson Nano.
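A slightly more informative check reports whether `onnx` and `tensorrt` can be imported and which versions are installed. This is a minimal sketch using only the standard library's `importlib`; the helper name `module_version` is our own and not part of either package.

```python
import importlib


def module_version(name):
    """Return the module's __version__ ('unknown' if absent), or None if it cannot be imported."""
    try:
        module = importlib.import_module(name)
    except ImportError:  # also covers ModuleNotFoundError
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    for name in ("onnx", "tensorrt"):
        version = module_version(name)
        print(f"{name}: {'not installed' if version is None else version}")
```

On a stock JetPack image you would expect `tensorrt` to report a 5.1.x version and `onnx` to report "not installed" until the steps below are done.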

Follow these steps to install ONNX on Jetson Nano (note that `apt-get` does not accept a `==3.2` version pin):

```shell
sudo apt-get install cmake
sudo apt-get install protobuf-compiler
sudo apt-get install libprotoc-dev
pip install --no-binary onnx 'onnx==1.5.0'
```

ONNX is now installed on Jetson Nano, with all its dependencies satisfied.

Next, download the ArcFace ResNet100 model from the ONNX Model Zoo:

```shell
wget https://s3.amazonaws.com/onnx-model-zoo/arcface/resnet100/resnet100.onnx
```

We use the TensorRT Python API for the conversion.

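```python
import os

import tensorrt as trt

batch_size = 1
TRT_LOGGER = trt.Logger(trt.Logger.WARNING)


def build_engine_onnx(model_file):
    """Parse an ONNX model and build a TensorRT engine (TensorRT 5.x Python API)."""
    with trt.Builder(TRT_LOGGER) as builder, \
            builder.create_network() as network, \
            trt.OnnxParser(network, TRT_LOGGER) as parser:
        builder.max_workspace_size = 1 << 30  # 1 GiB of scratch memory for the builder
        builder.max_batch_size = batch_size

        # Load the ONNX model and parse it in order to populate the TensorRT network.
        with open(model_file, 'rb') as model:
            if not parser.parse(model.read()):
                # Print every parser error (e.g. an unsupported operator) and give up.
                for i in range(parser.num_errors):
                    print(parser.get_error(i))
                return None

        return builder.build_cuda_engine(network)


# Path of the downloaded ArcFace model
onnx_file_path = './resnet100.onnx'
engine_file_path = './arcface_trt.engine'

if not os.path.exists(onnx_file_path):
    raise FileNotFoundError(onnx_file_path)

engine = build_engine_onnx(onnx_file_path)
if engine is None:
    raise RuntimeError('Failed to build the TensorRT engine')

# Serialize the engine so it can be loaded later without rebuilding it.
with open(engine_file_path, 'wb') as f:
    f.write(engine.serialize())
```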

Running the script fails with "Segmentation fault (core dumped)". After a lot of research, we found that the script itself is not the problem; the failure has other causes, which are explained in the following paragraphs.
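A segmentation fault kills the Python interpreter without printing a traceback. One generic way to see which Python line was running when the crash happened is the standard library's `faulthandler` module, enabled at the very top of the conversion script. This is a general debugging aid, not part of the original investigation.

```python
import faulthandler
import sys

# If the process receives SIGSEGV, SIGFPE, SIGABRT, SIGBUS or SIGILL (for example a
# crash inside native TensorRT code), dump the Python traceback of every thread to stderr.
faulthandler.enable(file=sys.stderr, all_threads=True)

# ... the rest of the conversion script follows here ...
```

The same effect is available without editing the script by starting it with `python3 -X faulthandler`.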

Jetson Nano is an ARM-based device with TensorRT 5.1 pre-installed, and the NVIDIA JetPack SDK image written to its SD card does not include TensorRT 6. Other models can be converted to TensorRT and run on it, but ArcFace cannot, because it contains PReLU activation functions, which are only supported from TensorRT 6 onward.
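To confirm that the ArcFace graph really contains PReLU layers, you can count the operator types in it; in ONNX the operator is named `PRelu`. A minimal sketch, assuming the `onnx` package installed earlier; the helper names are our own.

```python
from collections import Counter


def count_op_types(op_types):
    """Count how often each ONNX operator type (e.g. 'Conv', 'PRelu') occurs."""
    return Counter(op_types)


def load_op_types(model_path):
    """Return the op_type of every node in the main graph of an ONNX model file."""
    import onnx  # imported here so count_op_types() works without ONNX installed

    model = onnx.load(model_path)
    return [node.op_type for node in model.graph.node]
```

On the device, assuming the model was saved as `resnet100.onnx`, `count_op_types(load_op_types("resnet100.onnx"))["PRelu"]` gives the number of PReLU nodes; a non-zero result confirms the operator blamed above.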

Since we are unable to upgrade TensorRT from 5.1 to 6 on the device, the ArcFace model cannot be converted until NVIDIA provides a JetPack SDK OS image that ships TensorRT 6.
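A conversion script can check the installed TensorRT version and stop early instead of crashing. The sketch below assumes, following this post, that TensorRT 6.0 is the minimum version for ArcFace's PReLU layers; the helper names are hypothetical.

```python
import re


def parse_version(version):
    """Turn a version string such as '5.1.6.1' into a tuple of ints, e.g. (5, 1, 6, 1)."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)  # keep only the leading digits of each piece
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def meets_minimum(version, minimum=(6, 0)):
    """Return True if `version` is at least `minimum` (TensorRT 6.0 by default, per this post)."""
    parsed = parse_version(version)
    # Pad short versions such as "6" to the length of `minimum` before comparing.
    parsed += (0,) * max(0, len(minimum) - len(parsed))
    return parsed[: len(minimum)] >= minimum
```

On the device, `meets_minimum(tensorrt.__version__)` should return `False` for the TensorRT 5.1 that ships with JetPack.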

The TensorRT 6 installation packages are only available for the AMD64 (x86_64) architecture, so they cannot be installed on Jetson Nano, which is an ARM device. That is why the ArcFace ONNX model conversion failed.
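You can confirm which architecture a machine reports before picking a TensorRT package: Python's `platform.machine()` returns `aarch64` on Jetson Nano's 64-bit ARM Linux and `x86_64` on a typical AMD64 Linux PC. A small sketch; the helper name and labels are our own.

```python
import platform


def describe_architecture(machine=None):
    """Label the CPU architecture reported by platform.machine() (or the value passed in)."""
    machine = machine or platform.machine()
    key = machine.lower()
    if key in ("aarch64", "arm64"):
        return "ARM (aarch64)"
    if key in ("x86_64", "amd64"):
        return "AMD64 (x86_64)"
    return machine  # anything else is returned unchanged


if __name__ == "__main__":
    print(describe_architecture())
```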

As soon as NVIDIA releases a JetPack SDK OS image with TensorRT 6, the ArcFace ONNX model can be converted to TensorRT and we can run inference on it. If NVIDIA takes its time to update JetPack SDK, I am all ears for your thoughts and ideas on how to make this work in the meantime. We at DataToBiz always strive to adopt the latest tools and technologies to stay ahead of our competitors. Contact us for further details.

About the author: Sushavan is a B.Tech Computer Engineering student at Lovely Professional University. He worked as an intern at DataToBiz for 6 months.
